I launched an AngularJS redesign on a large-scale ecommerce site in late 2015, just two short weeks after Google announced the death of the AJAX crawler. When we launched, there was no documentation on how Googlebot would understand the site. We knew we didn’t need escaped fragment URLs, but did we need to pre-cache #! versions of URLs? Our team didn’t have the bandwidth, and our system couldn’t handle the tech debt pre-caching would create.

Basically, Google had just told us they ‘could’ understand our pages, but we had no guarantee that they would, or how consistently.

The project was named Space Party. We knew we had built a fast, user-friendly site with great performance. We didn’t know much else. Nine months later, there still isn’t much documentation.

Here’s what I learned. If you’re not too technical, don’t worry. We’ve put the big takeaways in bold.

What is AngularJS?

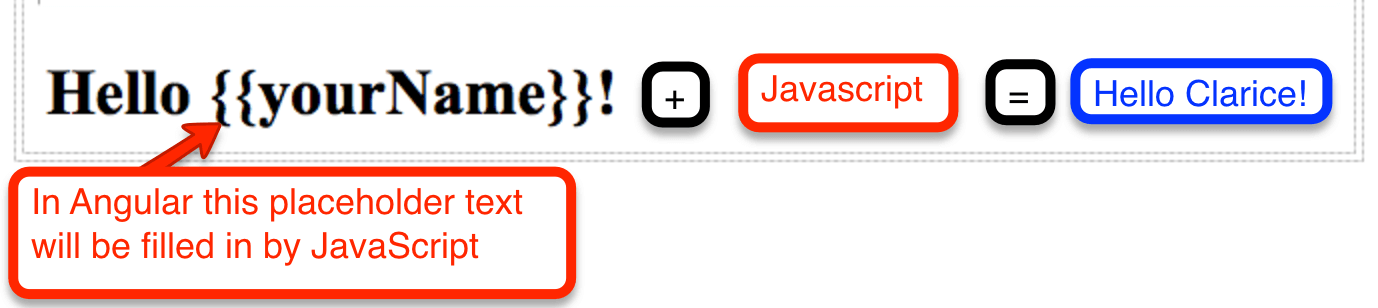

AngularJS is a JavaScript-based structural framework. Instead of writing code for each page, AngularJS lets you create a template. This template uses JavaScript to dynamically render the HTML that fills in the template.

Takeaway: Angular is a template for your content on initial page load. JavaScript fills in the content (a process called rendering) in the browser.
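To make the template idea concrete, here is a minimal sketch of placeholder interpolation in plain JavaScript. It is not how AngularJS works internally; `renderTemplate` and the sample product values are made up for illustration.

```javascript
// Minimal sketch of template interpolation. This is NOT AngularJS's real
// implementation; renderTemplate is a hypothetical helper for this example.
function renderTemplate(template, scope) {
  // Swap each {{name}} placeholder for the matching value in `scope`.
  // Unknown names are left in place so missing data stays visible.
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, function (match, name) {
    return Object.prototype.hasOwnProperty.call(scope, name)
      ? String(scope[name])
      : match;
  });
}

// What the server sends, and what a crawler that does not render would see:
const template = "<h1>{{title}}</h1><p>{{price}}</p>";

// What the browser shows once JavaScript has filled in the content:
const rendered = renderTemplate(template, { title: "Blue Widget", price: "$19.99" });
console.log(rendered); // <h1>Blue Widget</h1><p>$19.99</p>
```

The gap between `template` and `rendered` is the whole SEO question: a crawler that never runs the JavaScript only ever sees the first string.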

The Trouble with AngularJS

The initial page load looks like a template. There are bracketed placeholders where content like the H1 should be. AngularJS renders the content in the browser. You write the template, the template talks to Angular, and Angular updates the page. This is its biggest weakness, and it raises the question: how will Google understand my page if it only sees a template and the content lives elsewhere?

Until late 2015, Google recommended pre-caching a version of the page with the content filled in. Then they announced it was no longer necessary. The community had strong opinions, but Google had little official documentation.

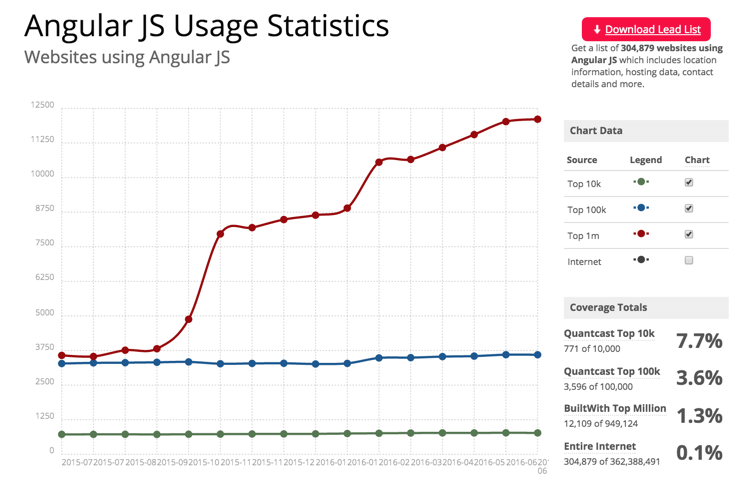

To date, 0.1% of sites are using Angular.

Takeaway: Yeah, we can launch a monkey into space. Now who wants to be the first monkey?

What SEOs Need to Know About Angular

Escaped Fragments

Prior to October 2015, Google didn’t have the capability to crawl and render dynamically created content. They required sites using AngularJS to create snapshots of the HTML content. Developers literally had to create two versions of every page and reference them in the tags.

Early adopters had to jump through bizarre hoops like _escaped_fragment_ URLs and AJAX directives. Industrious developers crafted clever resources to pre-render dynamic content. Luckily, times have changed. Google is generally able to render and understand your web pages like modern browsers. Google announced that they were deprecating the AJAX crawler in late 2015.

Takeaway: If you’re building a new site or restructuring an existing site, simply avoid introducing _escaped_fragment_ URLs.
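If you inherit an older site, it helps to recognize what these URLs look like in your logs. Under the deprecated scheme, a crawler rewrote a #! URL into a query parameter before requesting it. The sketch below shows that mapping in simplified form; `toEscapedFragmentUrl` is a hypothetical helper, and it only escapes a handful of the special characters the scheme called out.

```javascript
// Simplified sketch of the deprecated AJAX crawling URL mapping.
// toEscapedFragmentUrl is a hypothetical helper, not part of any library.
function toEscapedFragmentUrl(url) {
  const marker = url.indexOf("#!");
  if (marker === -1) return url; // no hashbang, nothing to map

  const base = url.slice(0, marker);
  const fragment = url.slice(marker + 2);

  // Percent-escape a few characters that would otherwise break the query
  // string: %, #, &, + and the space character.
  const escaped = fragment.replace(/[%#&+ ]/g, function (ch) {
    return "%" + ch.charCodeAt(0).toString(16).toUpperCase().padStart(2, "0");
  });

  // Append to an existing query string if the base URL already has one.
  const joiner = base.includes("?") ? "&" : "?";
  return base + joiner + "_escaped_fragment_=" + escaped;
}

console.log(toEscapedFragmentUrl("https://www.example.com/#!/products/42"));
// https://www.example.com/?_escaped_fragment_=/products/42
```

If you see requests like the second URL on a site you are rebuilding, that is a sign the old scheme is still in play somewhere.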

Pre-rendering

In a perfect world, I would have loved a pre-rendering safety net like Prerender.io. Like many teams, we didn’t have the bandwidth or resources to handle the tech debt.

Pre-rendering requires building and storing a version of the page for bots to interact with. One of Google’s biggest initiatives has been to perceive the internet as a user does. A separate version moves the search engine away from this goal. They’ve gone so far as to discourage it, stating that misuse could be seen as cloaking.

Q: “I use a JavaScript framework and my webserver serves a pre-rendered page. Is that still ok?

A: In general, websites shouldn’t pre-render pages only for Google. . . If you pre-render pages, make sure that the content served to Googlebot matches the user’s experience, both how it looks and how it interacts. Serving Googlebot different content than a normal user would see is considered cloaking, and would be against our Webmaster Guidelines.” – Google Blog

Takeaway: Pre-rendering provides a sense of security but may be more trouble than it’s worth.

History API pushState()

The development team agreed that #! URLs were not ideal. (Everyone loves a clean URL.) From an SEO standpoint, they added unnecessary complexity to the site. We used the History API’s pushState() to update the visible URL in the browser. Our sitemap included all canonical URLs, and we submitted it to Google.

We survived. In the spirit of Angular, our simple, clean tactic worked. Google recognized the content and our user-interaction data began to improve.

Takeaway: History API pushState(): Google recommended, site approved.
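For anyone who hasn’t seen pushState() in action, here is a rough sketch of the idea. The function name, the /products/ path and the stand-in history object are all assumptions made for the example; in a browser you would pass in `window.history`.

```javascript
// Sketch of updating the address bar without a #! fragment.
// showProduct and the /products/ path are hypothetical names.
function showProduct(historyApi, slug) {
  const cleanUrl = "/products/" + encodeURIComponent(slug);
  // pushState(state, unused title, url) adds a history entry and changes
  // the visible URL without reloading the page.
  historyApi.pushState({ slug: slug }, "", cleanUrl);
  return cleanUrl;
}

// Stand-in for window.history so the sketch also runs outside a browser.
const fakeHistory = {
  entries: [],
  pushState: function (state, title, url) {
    this.entries.push({ state: state, url: url });
  }
};

console.log(showProduct(fakeHistory, "blue widget")); // /products/blue%20widget
```

AngularJS apps usually get this behavior by enabling `$locationProvider.html5Mode(true)` rather than calling pushState() by hand. Either way, the server has to answer a direct request for each clean URL, or a shared link will land on a 404.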

Googlebot Crawls JavaScript, as Long as You Don’t Block It

Well, most of the time.

“Disallowing crawling of Javascript or CSS files in your site’s robots.txt directly harms how well our algorithms render and index your content and can result in suboptimal rankings.” – Google Blog

Takeaway: Good SEO is built with a pinch of distrust. Always check production.
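One quick way to check production is to run your script and stylesheet paths against the live robots.txt. The sketch below is deliberately rough: `blockedResources` is a hypothetical helper, it treats every Disallow line as applying to Googlebot, and it ignores Allow rules and user-agent groups entirely. The sample robots.txt and paths are invented.

```javascript
// Rough sketch: flag resource paths that a Disallow rule would match.
// Assumption: every Disallow line applies; Allow rules and User-agent
// groups are ignored, so treat hits as things to investigate.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

function ruleToRegExp(rule) {
  // robots.txt supports "*" as a wildcard and a trailing "$" as an anchor.
  const anchored = rule.endsWith("$");
  const body = anchored ? rule.slice(0, -1) : rule;
  const pattern = body.split("*").map(escapeRegExp).join(".*");
  return new RegExp("^" + pattern + (anchored ? "$" : ""));
}

function blockedResources(robotsTxt, paths) {
  const rules = robotsTxt
    .split(/\r?\n/)
    .map(function (line) { return line.replace(/#.*/, "").trim(); })
    .filter(function (line) { return /^disallow\s*:/i.test(line); })
    .map(function (line) { return line.slice(line.indexOf(":") + 1).trim(); })
    .filter(function (rule) { return rule.length > 0; }) // empty Disallow blocks nothing
    .map(ruleToRegExp);

  return paths.filter(function (path) {
    return rules.some(function (re) { return re.test(path); });
  });
}

const sampleRobots = "User-agent: *\nDisallow: /assets/js/\nDisallow: /*.css$";
const samplePaths = ["/assets/js/app.js", "/styles/site.css", "/products/42"];
console.log(blockedResources(sampleRobots, samplePaths));
// [ '/assets/js/app.js', '/styles/site.css' ]
```

Anything this turns up is worth confirming in Search Console before you change a rule.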

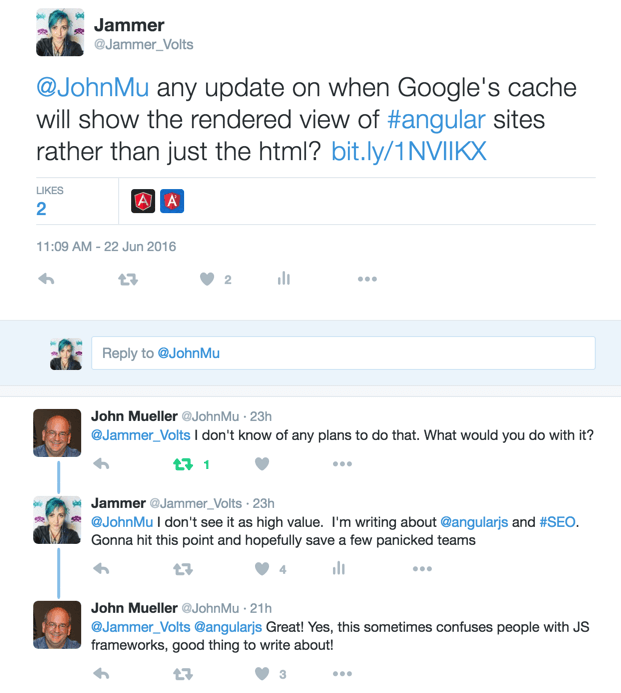

Google’s cached page will look horrifying.

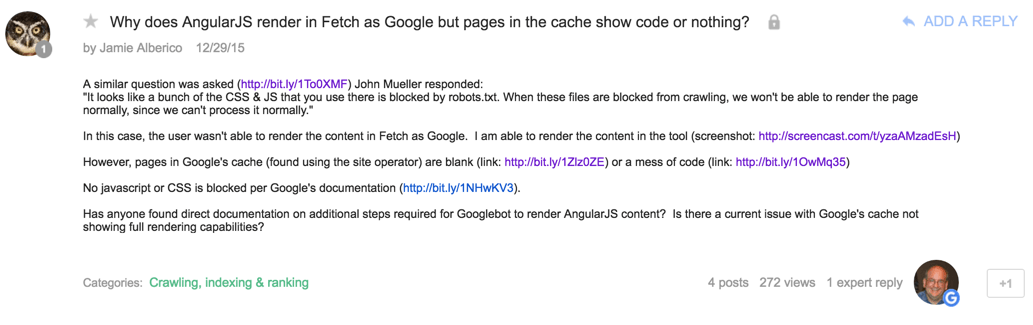

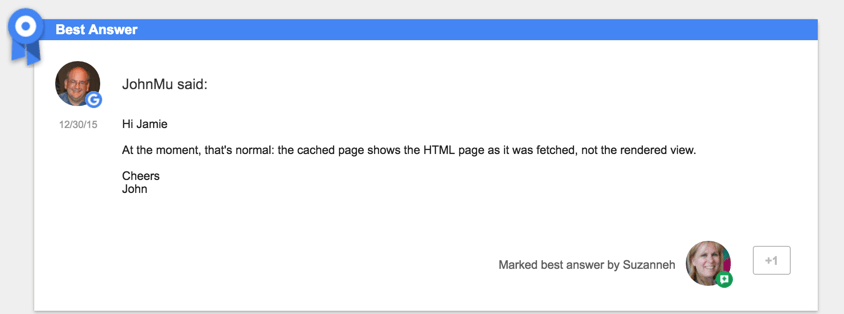

You may see squiggles of code or you may see nothing, even when none of your JavaScript or CSS is blocked. Don’t panic. That’s OK. Good guy John Mueller clarified this in the Webmaster Central Help Forum.

How to View the Cache

The cache’s content isn’t actually blank. It’s blocked visually when in full view. There are two ways around this.

- You can disable CSS in your browser

- Add &strip=1 to the end of the webcache URL to view the Text Only Version: https://webcache.googleusercontent.com/search?q=cache:www.example.com&strip=1

This isn’t likely to change. Even though Google understands the confusion, Angular is evolving rapidly. Devoting development work to a cache that shows rendered content isn’t worth it. Google and SEOs have bigger things to focus on.

Takeaway: You can (and should) still check for pages in the cache, but don’t rely on it for design or content checks.

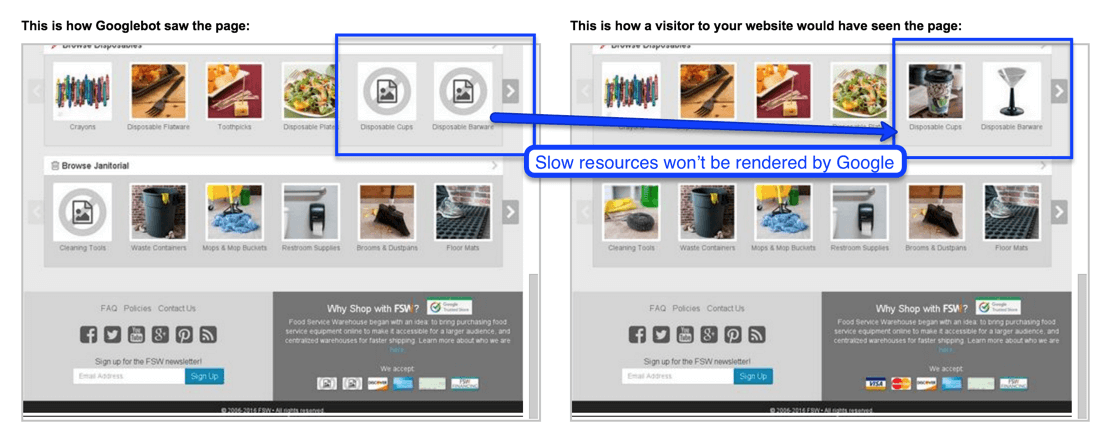

Use Google Search Console’s Fetch and Render

Look page by page with both user agents (desktop and mobile smartphone) to see what Google sees and what they think users see.

Learn how to use Fetch and Render with Google’s handy step-by-step tutorial.

Speed Matters

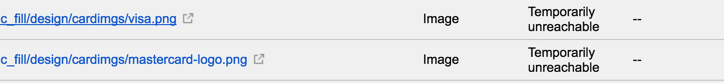

If a resource takes longer than 4 seconds to load, Google won’t render it.

Keep an eye out in Fetch and Render for Temporarily Unreachable resources. I mean, you chose Angular so you’d have a fast site. Keep it that way with resources available in under 2 seconds.

Takeaway: Temporarily Unreachable means too slow.
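If you have load times for your resources, a few lines of JavaScript can sort them against the 4-second cutoff and the 2-second target mentioned above. This is only a sketch: `classifyResources` is a hypothetical helper and the sample timings are invented, not measurements from our site.

```javascript
// Sketch: sort resources against the two thresholds from this section.
const RENDER_CUTOFF_MS = 4000; // slower than this and rendering is at risk
const TARGET_MS = 2000;        // the budget to aim for

function classifyResources(timings) {
  return timings.map(function (t) {
    let status = "ok";
    if (t.ms > RENDER_CUTOFF_MS) {
      status = "at risk of not rendering";
    } else if (t.ms >= TARGET_MS) {
      status = "over budget";
    }
    return { url: t.url, ms: t.ms, status: status };
  });
}

// Invented sample data for illustration only.
const sampleTimings = [
  { url: "/assets/app.js", ms: 850 },
  { url: "/assets/vendor.js", ms: 2600 },
  { url: "/api/products", ms: 4300 }
];

classifyResources(sampleTimings).forEach(function (r) {
  console.log(r.url + " (" + r.ms + " ms): " + r.status);
});
```

In a browser, `performance.getEntriesByType("resource")` returns entries with `name` and `duration` fields that can be mapped into this shape. Those numbers reflect your own connection rather than Googlebot’s, so treat them as a rough guide.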

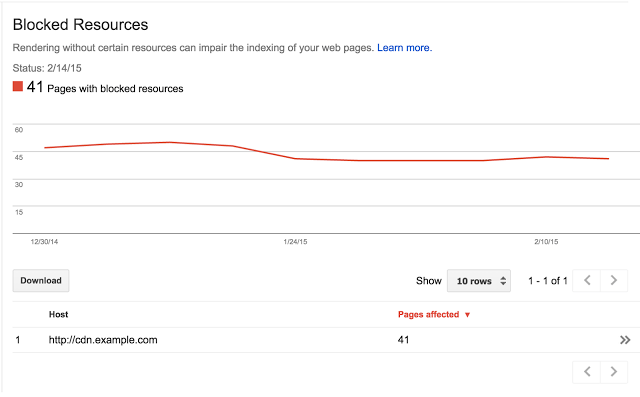

Check GSC’s Blocked Resources Report

Blocked resources can be a serious issue.

“An update to Fetch and Render shows how these blocked resources matter. When you request a URL be fetched and rendered, it now shows screenshots rendered both as Googlebot and as a typical user. This makes it easier to recognize the issues that significantly influence why your pages are seen differently by Googlebot.” – Google Blog

Takeaway: Love your resources like your rendering depends on it.

Screaming Frog Hasn’t Caught Up

UPDATE: Screaming Frog 6.0, codename Render-Rooney, includes rendered crawling.

@Jammer_Volts Yes 🙂

— Dan Sharp (@screamingfrog) July 28, 2016

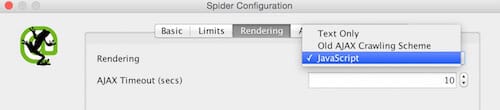

Chances are you use a web crawler like Screaming Frog to make sure the live site looks like you’d expect. If you find a crawler that works really well, please leave a link in the comments.

Pro-tip: Use Screaming Frog’s custom search to look for placeholder values. These can be placeholders like {{price}} to denote a product page or {{video title}} to look for pages with videos.
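The same check is easy to script if you already have the HTML a crawler saved. Here is a small sketch; `findUnrenderedPlaceholders` is a hypothetical helper and the sample markup is made up.

```javascript
// Sketch: list the {{placeholders}} still present in a page's HTML.
// Leftover placeholders suggest the content was never rendered.
function findUnrenderedPlaceholders(html) {
  const matches = html.match(/\{\{[^{}]*\}\}/g) || [];
  // De-duplicate while keeping the order of first appearance.
  return Array.from(new Set(matches));
}

const crawledHtml =
  "<h1>{{title}}</h1><span>{{price}}</span><p>Blue Widget</p><b>{{price}}</b>";
console.log(findUnrenderedPlaceholders(crawledHtml)); // [ '{{title}}', '{{price}}' ]
```

An empty list doesn’t prove the page rendered correctly, but a non-empty one tells you exactly which templates to look at.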

Takeaway: New cool tools will be here someday, but for now you have to get clever. Use Screaming Frog v6.0 to crawl your site. You’ll need to set your rendering preferences under Spider Configuration >> Rendering.

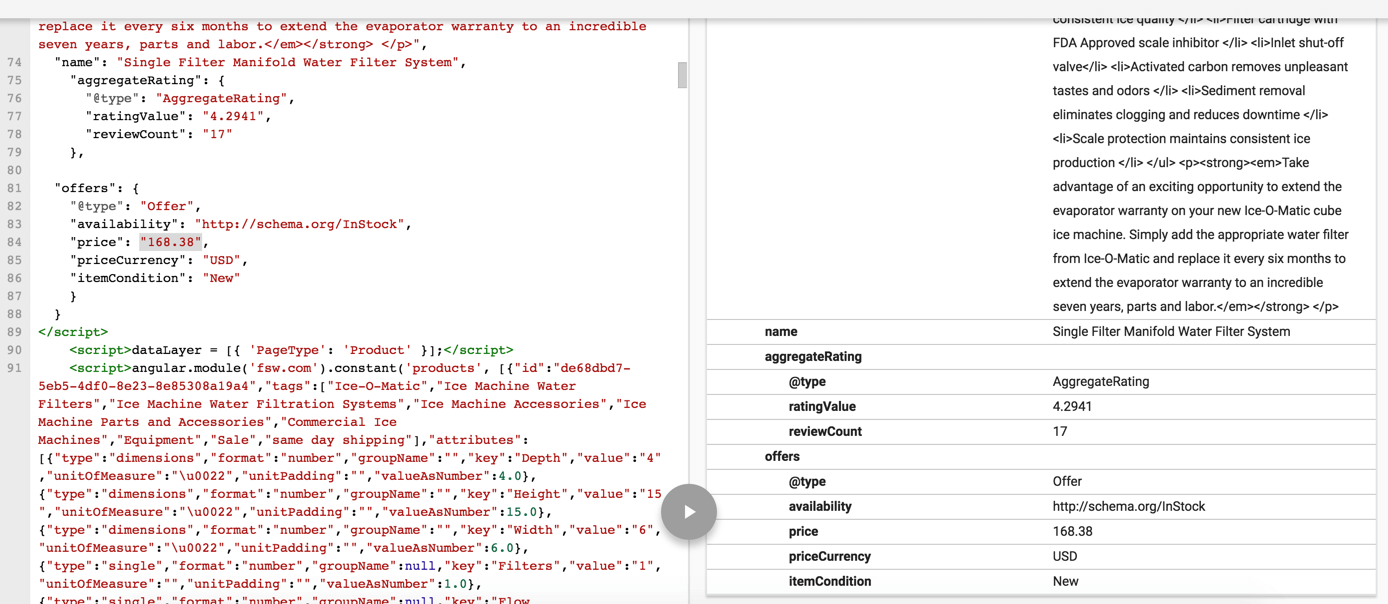

Google’s Structured Data Testing Tool Still Works

Pro-tip: Don’t mix your markup languages. JSON-LD is king.
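As a rough illustration of what sticking to JSON-LD can look like, the sketch below builds a schema.org Product block as a single script tag. The `productJsonLd` helper and the product fields are assumptions for the example, not the markup we shipped.

```javascript
// Sketch: build a JSON-LD script tag for a product.
// productJsonLd and the input field names are hypothetical.
function productJsonLd(product) {
  const data = {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product.name,
    offers: {
      "@type": "Offer",
      price: product.price,
      priceCurrency: product.currency
    }
  };
  // Escape "<" so a value containing "</script>" cannot end the tag early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return '<script type="application/ld+json">' + json + "</script>";
}

const productTag = productJsonLd({ name: "Blue Widget", price: "19.99", currency: "USD" });
console.log(productTag);
```

Paste the output into the Structured Data Testing Tool to confirm it parses. Keeping all structured data in one JSON-LD block also means there are no microdata attributes tangled up in your templates.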

In Conclusion

If you want to build a great site, you need to shoot for where users will be by the time you release. We started with a badly broken site built on years of patched code. The site redesign, Space Party, got its name from our humorously clumsy site search.

Thanks to Cary Haun and Brii Nicols for illustrating our site search folly

Google’s guidelines aren’t written in stone. Angular can create a better and faster web experience for users. This is what Google strives to promote. They’ve broken down their barriers to AJAX content. Angular and search will continue to evolve.

This evolution requires SEOs to stay on their toes. Site launches have changed and SEO considerations are critical when building a new or redesigned AngularJS site.

Much love to the Space Party Team for their hard work and to Damien Brown for his help in understanding the big questions SEOs ask when they tango with Angular.